Voice AI Latency Benchmarks: What Agencies Need to Know in 2026

Voice AI latency ranges from 300ms to 2,500ms across platforms, and responses under 800ms are the benchmark for natural conversation flow.

Latency is the single biggest factor determining whether your clients' callers think they're talking to a real person or an obvious robot. When agencies resell voice AI, latency complaints translate directly into client churn. Understanding how different platforms perform under real-world conditions helps you choose technology that keeps clients happy and reduces support tickets.

Which Trillet product is right for you?

Small businesses: Trillet AI Receptionist - 24/7 call answering starting at $29/month

Agencies: Trillet White-Label - Studio at $99/month (up to 3 sub-accounts) or Agency at $299/month (unlimited sub-accounts)

What Is Voice AI Latency and Why Does It Matter?

Voice AI latency measures the time between when a caller finishes speaking and when the AI begins responding. It includes transcription processing, LLM inference, and text-to-speech generation.

Human conversations have natural response gaps of 200-400ms. When AI latency exceeds 800ms, callers notice awkward pauses. Above 1,500ms, conversations feel broken and frustrating. Your clients' customers hang up, miss appointments, and leave bad reviews.

For agencies, latency problems mean:

Increased client support requests

Higher churn rates as clients switch platforms

Difficulty closing new sales when demos feel unnatural

Negative word-of-mouth in your target verticals

How Do Voice AI Platforms Compare on Latency?

Latency varies significantly across white-label platforms due to architectural differences.

| Platform | Typical Latency | Architecture | Notes |
| --- | --- | --- | --- |
| Trillet | 800ms-1,200ms | Native platform | Dynamic conversation without flow builder overhead |
| Synthflow | 1,000ms-1,800ms | Visual flow builder | Flow processing adds latency on complex paths |
| VoiceAIWrapper | Provider-dependent | Wrapper layer | Inherits latency from underlying provider (Vapi/Retell) |
| Retell AI | 600ms-900ms | Modular infrastructure | Requires engineering to optimize |
| Vapi | 700ms-1,500ms | API-first | Highly variable based on configuration |

The key architectural difference is how platforms handle conversation logic. Visual flow builders like Synthflow process decision trees sequentially, which adds latency when conversations take complex paths. Native platforms like Trillet use dynamic architectures that maintain consistent latency regardless of conversation complexity.

Why Does Trillet Have Slightly Higher Latency Than Raw Infrastructure Platforms?

Trillet's latency is intentionally higher than bare-bones infrastructure platforms like Retell because Trillet includes conversation quality overhead that makes agents sound human rather than robotic.

Every Trillet call includes built-in context that raw API platforms leave to developers:

Date and Time Awareness: Trillet agents automatically know the current date, time, day of week, and timezone. When a caller asks "Can I book for tomorrow?" or "Are you open right now?", the agent responds accurately without developers writing custom logic. Raw platforms require you to inject this context manually, or the agent sounds confused about basic temporal concepts.

Graceful Conversation Endings: Trillet agents are trained to end calls naturally by acknowledging that the caller's needs were met, offering follow-up assistance, and closing with appropriate pleasantries. This prevents the abrupt endings that make callers feel dismissed. Raw infrastructure returns a response; what happens next is your problem.

Human Conversational Patterns: Trillet adds processing for natural speech patterns: appropriate filler words, conversational acknowledgments, and response pacing that matches human dialogue. Raw API platforms optimize for speed, not for whether the caller feels like they're talking to a person.

These quality-of-life features add approximately 100-300ms to each response. Trillet made a deliberate architectural decision: prioritize caller experience over benchmark numbers. For real business calls, an agent that responds in 900ms but sounds natural outperforms an agent that responds in 600ms but feels robotic.

The threshold that matters is caller perception, not milliseconds. Research on conversational AI shows that responses under 1,500ms feel natural to most callers. Above that threshold, awkward pauses accumulate. Dropping below 800ms provides no perceptible improvement, because most callers don't notice the difference between 600ms and 900ms in conversation.

Trillet optimizes for the 800ms-1,200ms range that delivers natural conversation while including the context that makes agents actually useful. Agencies using raw infrastructure platforms either accept robotic-sounding agents or spend engineering time replicating what Trillet includes out of the box.
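For agencies working directly with raw infrastructure, the date and time context described above could be injected by hand along the following lines. This is a minimal sketch, not Trillet's implementation: the function name `build_temporal_context`, the wording of the context string, and the fixed UTC-5 offset are all illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone


def build_temporal_context(now: datetime) -> str:
    """Return a short context string to prepend to an agent's system prompt.

    `now` must be timezone-aware so the timezone name can be reported.
    """
    if now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    return (
        f"Current date: {now:%Y-%m-%d}. "
        f"Day of week: {now:%A}. "
        f"Local time: {now:%H:%M}. "
        f"Timezone: {now.tzname()}."
    )


# Hypothetical business timezone. A fixed offset keeps the sketch
# dependency-free; zoneinfo.ZoneInfo would handle daylight saving in real use.
business_tz = timezone(timedelta(hours=-5), "EST")

# Rebuild the context at the start of every call so it never goes stale.
context = build_temporal_context(datetime.now(business_tz))
print(context)
```

The resulting string would be placed ahead of the agent's own instructions, so that questions such as "Are you open right now?" can be answered against the business's local time.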

What Factors Affect Voice AI Latency?

Several technical factors contribute to overall latency. Understanding these helps agencies troubleshoot issues and set realistic client expectations.

Speech-to-Text Processing (100-300ms): Transcription speed depends on the STT provider and audio quality. Background noise, accents, and poor phone connections all increase processing time.

LLM Inference (200-800ms): The AI model generates responses based on conversation context. Larger models with more capabilities typically have higher latency. Some platforms use smaller, faster models for simple responses and route complex queries to more capable models.

Text-to-Speech Generation (100-400ms): This stage converts the AI's text response into natural-sounding audio. Higher quality voices often require more processing time. Streaming TTS reduces perceived latency by starting playback before the full response is generated.

Network Round Trips (50-200ms): Data travels between the caller's phone, the platform's servers, and various AI service providers. Platforms with edge deployments and optimized routing have lower network latency.

Flow Builder Overhead (0-500ms): Platforms using visual flow builders add processing time as the system evaluates decision paths. Complex flows with many branches accumulate latency at each decision point.
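The component ranges above can be added up into a rough end-to-end budget. The sketch below assumes every stage runs strictly one after another, which overstates the delay a caller hears on platforms that stream or overlap stages. The dictionary keys and the function name are illustrative.

```python
# Component ranges in milliseconds, taken from the breakdown above.
COMPONENT_RANGES_MS = {
    "speech_to_text": (100, 300),
    "llm_inference": (200, 800),
    "text_to_speech": (100, 400),
    "network_round_trips": (50, 200),
    "flow_builder_overhead": (0, 500),
}


def total_latency_range(components):
    """Sum best-case and worst-case figures, assuming stages run in sequence."""
    best = sum(low for low, _ in components.values())
    worst = sum(high for _, high in components.values())
    return best, worst


best_ms, worst_ms = total_latency_range(COMPONENT_RANGES_MS)
print(f"All stages: {best_ms}ms to {worst_ms}ms")  # 450ms to 2200ms

# The same budget for a platform with no visual flow builder.
no_flow = {k: v for k, v in COMPONENT_RANGES_MS.items() if k != "flow_builder_overhead"}
best_ms, worst_ms = total_latency_range(no_flow)
print(f"Without flow builder: {best_ms}ms to {worst_ms}ms")  # 450ms to 1700ms
```

Under these assumptions, LLM inference is the largest single contributor in the worst case, with flow builder overhead second.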

How Should Agencies Test Latency Before Committing?

Before signing up for any white-label platform, run these tests to measure real-world performance:

Call during peak hours - Test between 9am and 5pm local time, when servers are under load

Test complex scenarios - Don't just test "what are your hours?" Ask multi-part questions that require context

Test from mobile phones - Many callers use cell networks with higher baseline latency

Test with background noise - Real calls include traffic, office chatter, and poor connections

Test over multiple days - Single tests don't reveal consistency issues

Record timestamps for when you finish speaking and when the AI begins responding. Average at least 10 calls across different scenarios.
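One way to turn the recorded timestamps into numbers is sketched below. It assumes each test call is logged as a pair of timestamps in seconds: when you finished speaking and when the AI began responding. The sample values are made up for illustration and are not measurements of any platform.

```python
from statistics import mean


def response_latencies_ms(calls):
    """Convert (caller_stopped_s, agent_started_s) pairs into latencies in ms."""
    latencies = []
    for stopped, started in calls:
        if started < stopped:
            raise ValueError("agent response starts before the caller finished")
        latencies.append(round((started - stopped) * 1000))
    return latencies


# Ten illustrative test calls (made-up timestamps, in seconds from call start).
sample_calls = [
    (4.20, 5.10), (9.75, 10.80), (3.10, 4.05), (7.00, 8.20), (5.50, 6.35),
    (2.25, 3.40), (6.80, 7.70), (8.10, 9.60), (1.95, 2.95), (4.60, 5.70),
]

latencies = response_latencies_ms(sample_calls)
print(f"Calls measured: {len(latencies)}")
print(f"Average latency: {mean(latencies)}ms")
print(f"Slowest call: {max(latencies)}ms")
```

Keeping the slowest call alongside the average helps surface the consistency issues that a single test would hide.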

What Latency Should Agencies Promise Clients?

Set realistic expectations with clients rather than overpromising based on best-case benchmarks.

Recommended Client SLA Targets:

Average response time: Under 1,200ms

95th percentile: Under 2,000ms

Maximum acceptable: Under 3,000ms

Building in buffer protects you from occasional spikes. If a platform advertises 600ms latency, expect 800-1,000ms in production with real traffic.

Document these expectations in your client contracts. When clients understand that sub-second latency isn't guaranteed on every call, they're less likely to complain about occasional delays.
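The three SLA targets above can be checked against a batch of measured latencies with a short script. This sketch uses the nearest-rank method for the 95th percentile, which is one of several common definitions. The function names and the sample data are illustrative assumptions.

```python
from statistics import mean

# Targets in milliseconds, from the recommended SLA list above.
SLA_TARGETS_MS = {"average": 1200, "p95": 2000, "maximum": 3000}


def percentile_nearest_rank(values, percent):
    """Return the nearest-rank percentile (percent is an integer from 1 to 100)."""
    ordered = sorted(values)
    rank = (percent * len(ordered) + 99) // 100  # ceiling of percent * n / 100
    return ordered[max(rank, 1) - 1]


def check_sla(latencies_ms, targets=SLA_TARGETS_MS):
    """Return a pass/fail flag per target; a target passes when observed < limit."""
    if not latencies_ms:
        raise ValueError("no latency samples provided")
    observed = {
        "average": mean(latencies_ms),
        "p95": percentile_nearest_rank(latencies_ms, 95),
        "maximum": max(latencies_ms),
    }
    return {name: observed[name] < limit for name, limit in targets.items()}


# Twenty made-up samples in milliseconds, including a few slow calls.
samples = [850, 900, 920, 950, 980, 1000, 1020, 1050, 1080, 1100,
           1100, 1150, 1180, 1200, 1250, 1300, 1400, 1600, 1900, 2600]

for target, passed in check_sla(samples).items():
    print(f"{target}: {'pass' if passed else 'FAIL'}")
```

In this made-up batch, a handful of slow calls pushes the average over its target even though the 95th percentile and the maximum stay within theirs, which is why all three figures are worth tracking.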

How Does Trillet Achieve Consistent Latency?

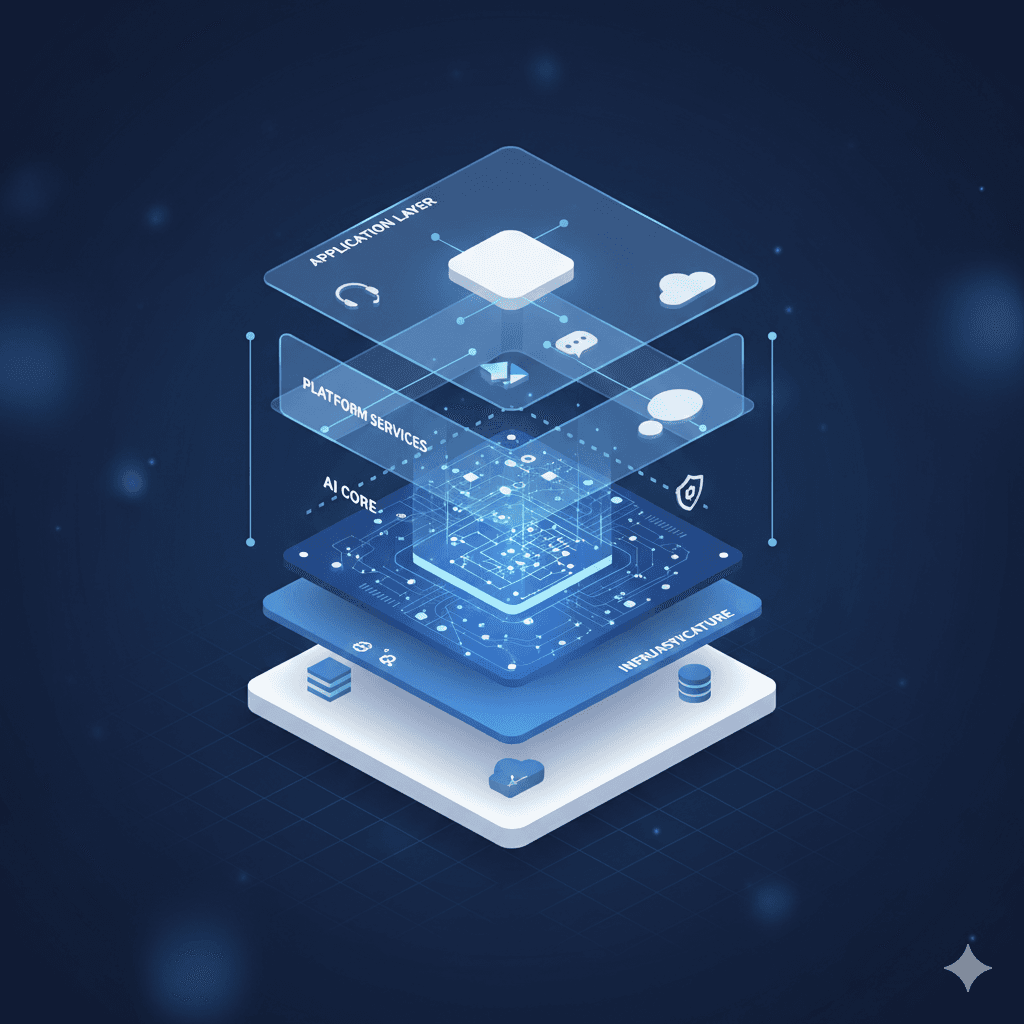

Trillet's architecture prioritizes consistent, predictable latency over chasing benchmark numbers that don't translate to better caller experience. Four design choices contribute to reliable performance:

Dynamic Conversation Architecture: Instead of processing visual flow trees, Trillet's agents use dynamic conversation handling that doesn't accumulate latency at decision points. Complex conversations have the same latency profile as simple ones.

Built-In Conversation Intelligence: Rather than shipping a raw API and leaving quality to developers, Trillet includes the processing overhead for natural conversations: temporal awareness, graceful endings, and human speech patterns. This adds milliseconds but eliminates the engineering burden of making agents sound professional.

Crews for Multi-Agent Handoffs: When conversations require specialized knowledge, Trillet's Crews feature enables seamless handoffs between agents without the latency penalty of routing through flow builder logic.

Streaming Response Generation: Trillet begins audio playback while still generating the full response, reducing perceived wait time even when total processing takes longer.

For agencies, this means fewer client complaints about "the AI pausing too long," more natural-sounding demos when closing sales, and no surprise engineering work to fix agents that technically work but sound robotic.
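The effect of streaming on perceived wait time can be illustrated with a simple model. This is a generic sketch and not Trillet's implementation. It assumes the spoken response is synthesized in chunks, looks only at the text-to-speech stage, and uses hypothetical per-chunk timings.

```python
def time_to_first_audio_ms(chunk_synthesis_ms, streaming):
    """Return how long the caller waits before hearing any audio.

    With streaming, playback starts once the first chunk is ready.
    Without it, playback waits until every chunk has been synthesized.
    """
    if not chunk_synthesis_ms:
        raise ValueError("at least one chunk is required")
    if streaming:
        return chunk_synthesis_ms[0]
    return sum(chunk_synthesis_ms)


# Hypothetical synthesis time for each of three audio chunks, in milliseconds.
chunks = [120, 110, 130]

print(f"Streaming: {time_to_first_audio_ms(chunks, streaming=True)}ms")   # 120ms
print(f"Buffered:  {time_to_first_audio_ms(chunks, streaming=False)}ms")  # 360ms
```

Total processing time is the same in both cases. Only the moment the caller first hears audio changes.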

Frequently Asked Questions

What is considered good latency for voice AI?

Sub-800ms is excellent and feels natural to most callers. 800ms-1,200ms is acceptable for business calls. Above 1,500ms creates noticeable awkwardness that impacts caller experience.

Which Trillet product should I choose?

If you're a small business owner looking for AI call answering, start with Trillet AI Receptionist at $29/month. If you're an agency wanting to resell voice AI to clients, explore Trillet White-Label—Studio at $99/month (up to 3 sub-accounts) or Agency at $299/month (unlimited sub-accounts).

Why do visual flow builders have higher latency?

Flow builders evaluate decision trees sequentially at each conversation branch. Complex flows with many conditions accumulate processing time. A 10-step flow might add 300-500ms compared to dynamic architectures that evaluate context in parallel.
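As a rough worked example of that accumulation, the 300-500ms figure for a 10-step flow corresponds to about 30-50ms per step if the cost is spread evenly. The even per-step cost is an assumption made here for illustration. Real flows will vary from node to node.

```python
# Assumed per-step cost, derived from 300-500ms spread over a 10-step flow.
PER_STEP_MS = (30, 50)


def flow_overhead_ms(steps, per_step_ms=PER_STEP_MS):
    """Return the (low, high) added latency for a flow evaluated step by step."""
    if steps < 0:
        raise ValueError("steps must be non-negative")
    low, high = per_step_ms
    return steps * low, steps * high


for steps in (3, 5, 10):
    low, high = flow_overhead_ms(steps)
    print(f"{steps}-step flow: +{low}ms to +{high}ms")
```

Under this assumption, trimming a 10-step path to 5 steps would roughly halve the added overhead, which is why simplifying conversation flows is a common optimization.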

Can I improve latency on existing platforms?

Some optimizations help: simplify conversation flows, reduce knowledge base size, choose faster TTS voices, and minimize API integrations in the response path. However, fundamental architecture limits how much you can improve.

Should I include latency metrics in client dashboards?

Yes. Showing clients their average response times builds trust and helps identify issues before they escalate. Trillet's analytics dashboard includes latency metrics for transparency.

Conclusion

Latency separates voice AI that impresses callers from AI that frustrates them. For agencies, choosing a platform with consistent sub-1,200ms latency reduces client churn and support burden while making demos more convincing.

Trillet's dynamic architecture delivers reliable latency without the overhead of visual flow builders, making it a strong choice for agencies prioritizing caller experience. Explore Trillet White-Label pricing starting at $99/month for the Studio plan or $299/month for unlimited sub-accounts.
